Designing for Distress: Balancing Legal, Behavioral Science, and UX in the Lotic Beaconing Project

Lotic Beaconing Case Study | 📍Product design at Lotic.ai

This case study tells the story of how design worked with the legal, behavioral science, and product teams to help Lotic users in distress find support.

To comply with my non-disclosure agreement, I have omitted and obfuscated confidential information in this case study. The views expressed here are my own and do not necessarily reflect those of Lotic.ai.

Context

As a wellness company, Lotic.ai is committed to supporting users who may be in distress or at risk of harm. The Clinical Beaconing Project was a vital initiative designed to offer resources when users' language suggests they might pose a threat to themselves or others. This feature plays a critical role in the Lotic.ai platform by directing users in need of help to appropriate external resources. Beyond its importance as a safety measure for users, the functionality also helps safeguard Lotic by fulfilling necessary legal responsibilities.

My role as Lead Designer

As the sole designer, I led the effort to design and implement this feature, collaborating closely with clinical data science, product, engineering, and legal teams. My role was to ensure that the beaconing feature could detect potentially harmful language and provide users with both internal and external resources in a timely and user-friendly manner.

Cross-team Collaboration:

- Clinical Behavioral Science: Suzanne Button, Katie Penry, Anne Park

- Data Science: Gavin Patient

- Legal: Ashton Harper

- Product: Stephanie Padula

- Content: Moody Moral, Laurenne Sala, Justin Toledo

The project had been paused before I took it on, leaving behind a wireframe by another designer. When I restarted the effort, however, I chose to revisit the problem from the ground up, and I led the project to completion within two months.

The problem

The core problem we aimed to solve was how to provide timely and accessible support to users in distress who might pose a threat to themselves or others. Given the sensitivity of the situation, the solution needed to be simple, easy to understand, and engaging, while avoiding complex user flows, complicated UI design, long blocks of text, and difficult legal terms or language that could overwhelm users in distress.

This framed the central challenge of the project:

How can we transform complex, dry legal language into a simple, approachable, and even engaging design that allows a person in distress to easily seek help and take action, while still adhering to legal regulations?

Research and interviews

Although earlier wireframes existed, I chose to start with research so I would understand the problem before jumping into design. I organized rounds of meetings with the major stakeholders (the clinical behavioral science, data science, product, legal, and engineering teams) to build a deep understanding of the problem and the technology behind it.

a, interview with the data science team: understand the NLP technology

The data science team provided valuable insights into how Natural Language Processing (NLP) would be used to identify harmful words in users' audio recordings. The initial approach hard-coded a predefined list of harmful words; detecting any of them triggered a workflow that directed the user to a list of external, supportive resources. This method was chosen because it could be deployed quickly. As Lotic's Wisdom Engine evolves, the data science team plans to explore more advanced and nuanced approaches to detecting and flagging risk, allowing for a more sophisticated and flexible system in the future.
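To make the hard-coded approach concrete, here is a minimal sketch of keyword-based flagging. It assumes the audio has already been transcribed to text, and the phrase list, `BeaconResult`, and `check_transcript` are hypothetical names for illustration only; Lotic's actual word list and pipeline were confidential and are not shown here.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative phrases only. The real list was confidential
# and curated with the clinical behavioral science team.
FLAGGED_PHRASES = ["hurt myself", "no way out"]


@dataclass
class BeaconResult:
    triggered: bool      # True if the resources workflow should be shown
    matches: list[str]   # which predefined phrases were found


def _compile(phrases: list[str]) -> list[tuple[str, re.Pattern]]:
    """Build one case-insensitive, word-boundary regex per phrase,
    allowing any run of whitespace between the phrase's words."""
    compiled = []
    for phrase in phrases:
        words = [re.escape(word) for word in phrase.lower().split()]
        pattern = re.compile(r"\b" + r"\s+".join(words) + r"\b", re.IGNORECASE)
        compiled.append((phrase, pattern))
    return compiled


_PATTERNS = _compile(FLAGGED_PHRASES)


def check_transcript(transcript: str) -> BeaconResult:
    """Flag a transcript if it contains any predefined phrase."""
    matches = [phrase for phrase, pattern in _PATTERNS if pattern.search(transcript)]
    return BeaconResult(triggered=bool(matches), matches=matches)


if __name__ == "__main__":
    result = check_transcript("Some days I feel like there is no way out.")
    if result.triggered:
        print("Show beaconing resources for:", result.matches)
```

A fixed list like this is fast to ship and easy to audit, which fits the "deploy quickly" rationale, but it has no sense of context: a sentence such as "I would never hurt myself" still matches. That limitation is one reason the team planned more nuanced detection later.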

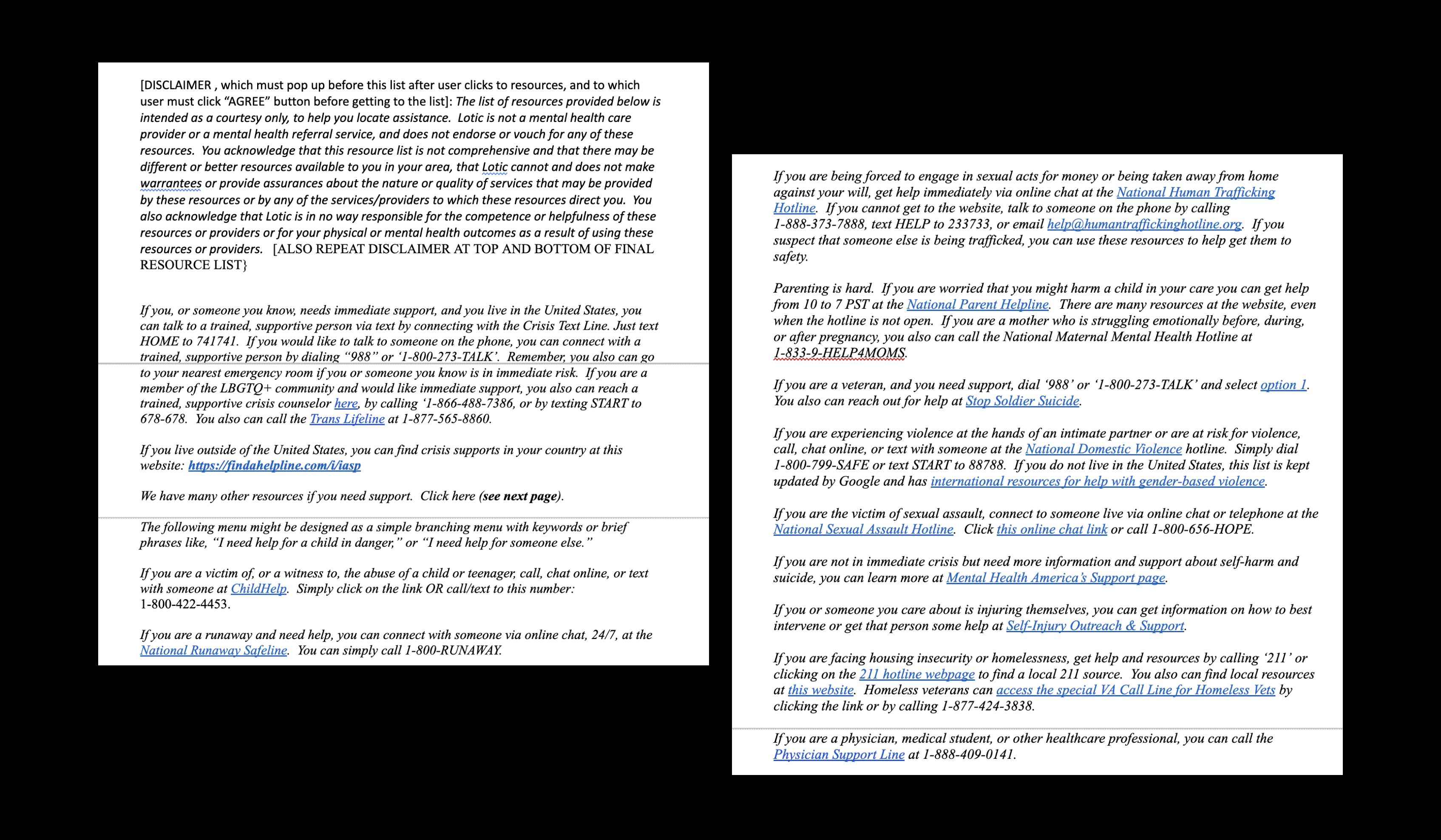

b, interview with the legal team: navigate legal compliance and its challenges

Discussions with the legal team were challenging, as they were keen on keeping the language and steps outlined in the existing wireframe, even though that wireframe was long, hard to understand, and involved too many steps. At first, there seemed to be little room to push for changes from a legal standpoint. From a user experience perspective, however, the design needed significant improvement. My challenge was to strike a balance between the legal requirements and a more user-friendly design.

Here is a glimpse of the legal requirements, including disclaimer and resource information, which were long, disorganized, and hard to understand.

c, interview with the clinical behavioral science team: optimize the UX copy

The clinical behavioral science team played a key role in providing the UX copy for the design, primarily by compiling a comprehensive list of resources, including contact information, in a shared Google document. We had several discussions to determine which information was essential, what could be omitted, and how the content could be improved to better serve the users.

d, user interviews

Due to the sensitivity of this project, we did not conduct user interviews, as it was difficult to ethically recruit participants who might be in distress or at risk. However, we employed alternative methods for user testing, which I will elaborate on later.

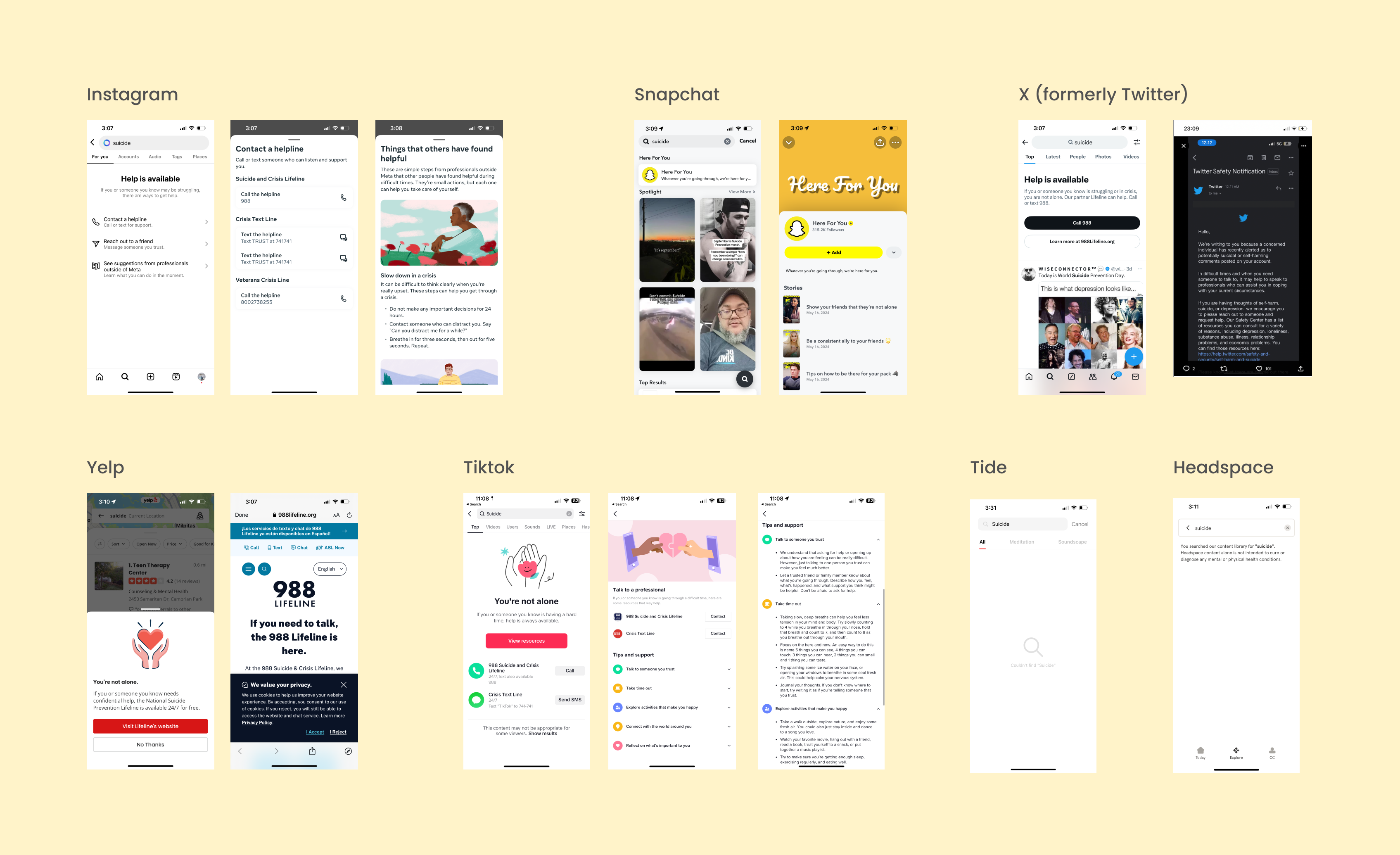

Competitor analysis

To better understand how the industry supports users in need, I conducted a competitor analysis of popular apps such as Instagram, X (formerly Twitter), TikTok, Yelp, and Snapchat, as well as mental wellness apps like Tide and Headspace. Surprisingly, while every social media app I reviewed offered external resources when users expressed self-harm tendencies, the mental wellness apps did not provide any such resources.

Key findings from the competitor analysis include:

- The beaconing feature in social media apps is designed to be straightforward, with prominent call-to-action buttons that make it easy for users to access help.

- The language used is concise and to the point, avoiding unnecessary wording or distractions.

- Visual elements, such as calming graphics, are used to help soothe users' emotions and create a sense of warmth and support.

- Light mode design is commonly used when displaying resources, contributing to a clean and approachable interface.

- Some apps, like Snapchat, offer video content from their own platforms to provide assistance.

- Additional methods, such as sending alerts via email, are employed to further support users in distress.

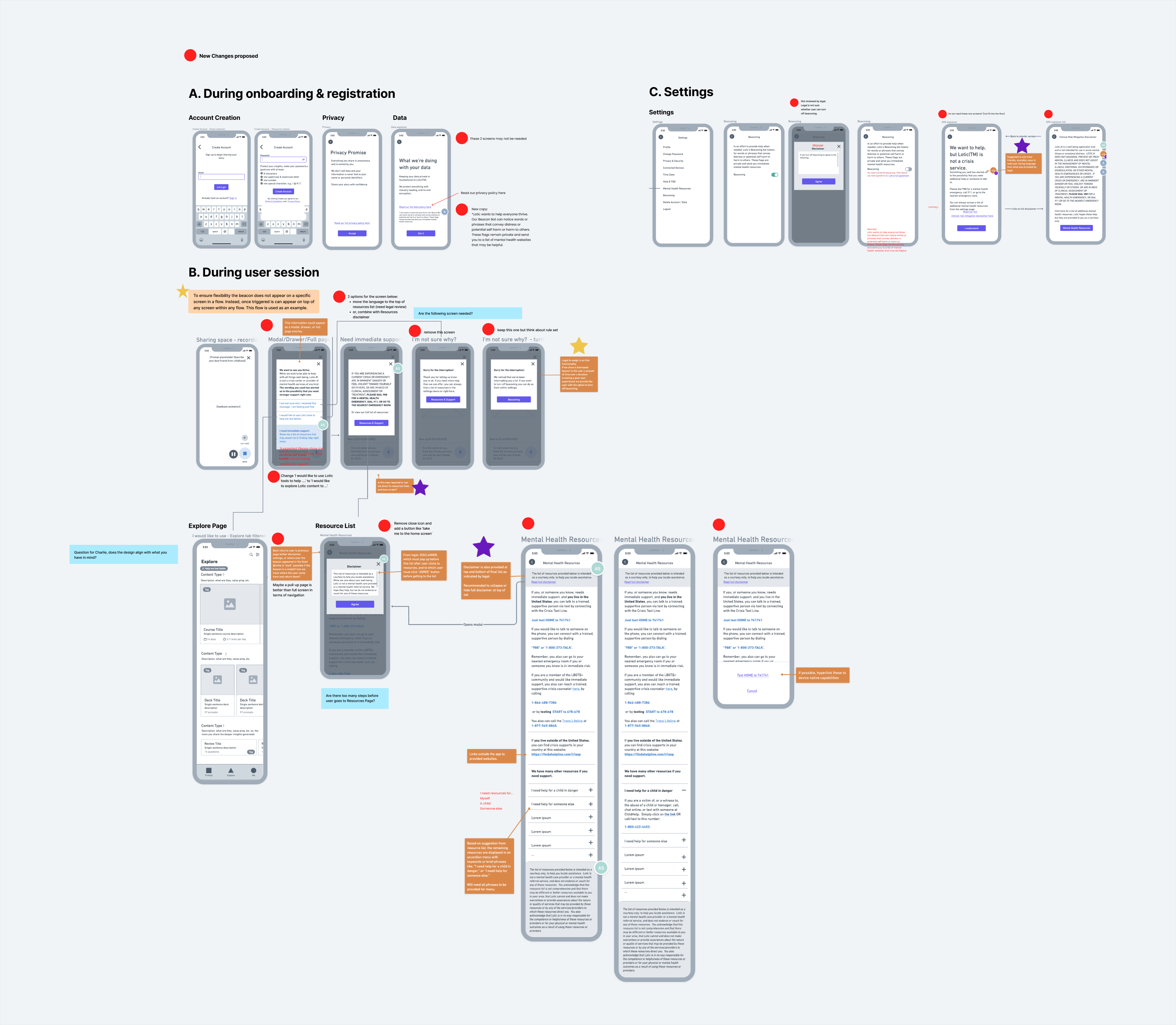

Reassess the existing wireframe

Unlike most projects that start from scratch, the design of the beaconing feature had already been initiated by another designer but was paused due to business concerns. When I took over the project, there were existing wireframes and ongoing conversations to consider.

In addition to my independent research, I organized workshops to audit the existing wireframe in collaboration with the legal and behavioral science teams. These workshops provided an opportunity to raise my concerns, particularly with the legal team, and propose ideas to simplify the user flow and improve the overall design.

Our discussions focused on several key topics:

- Can we streamline the user flow by removing certain screens?

- Can we simplify the flow by combining specific screens?

- How can we make the text shorter and easier to understand?

- Can we rearrange the content to present it more effectively?

- What are the minimum legal requirements that we must adhere to?

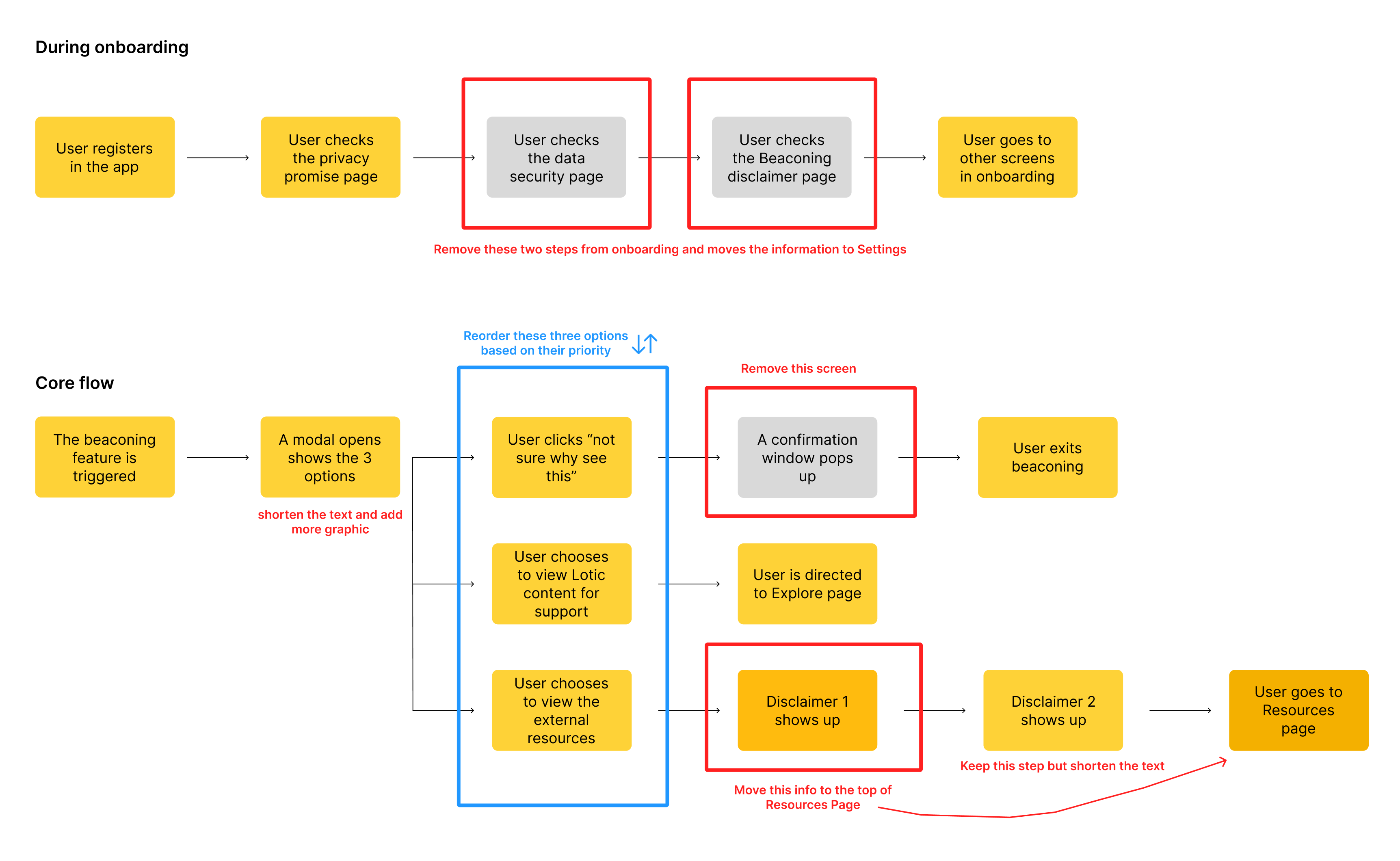

User flow improvement

Here are some new changes reflected in the user flow:

Design and prototype

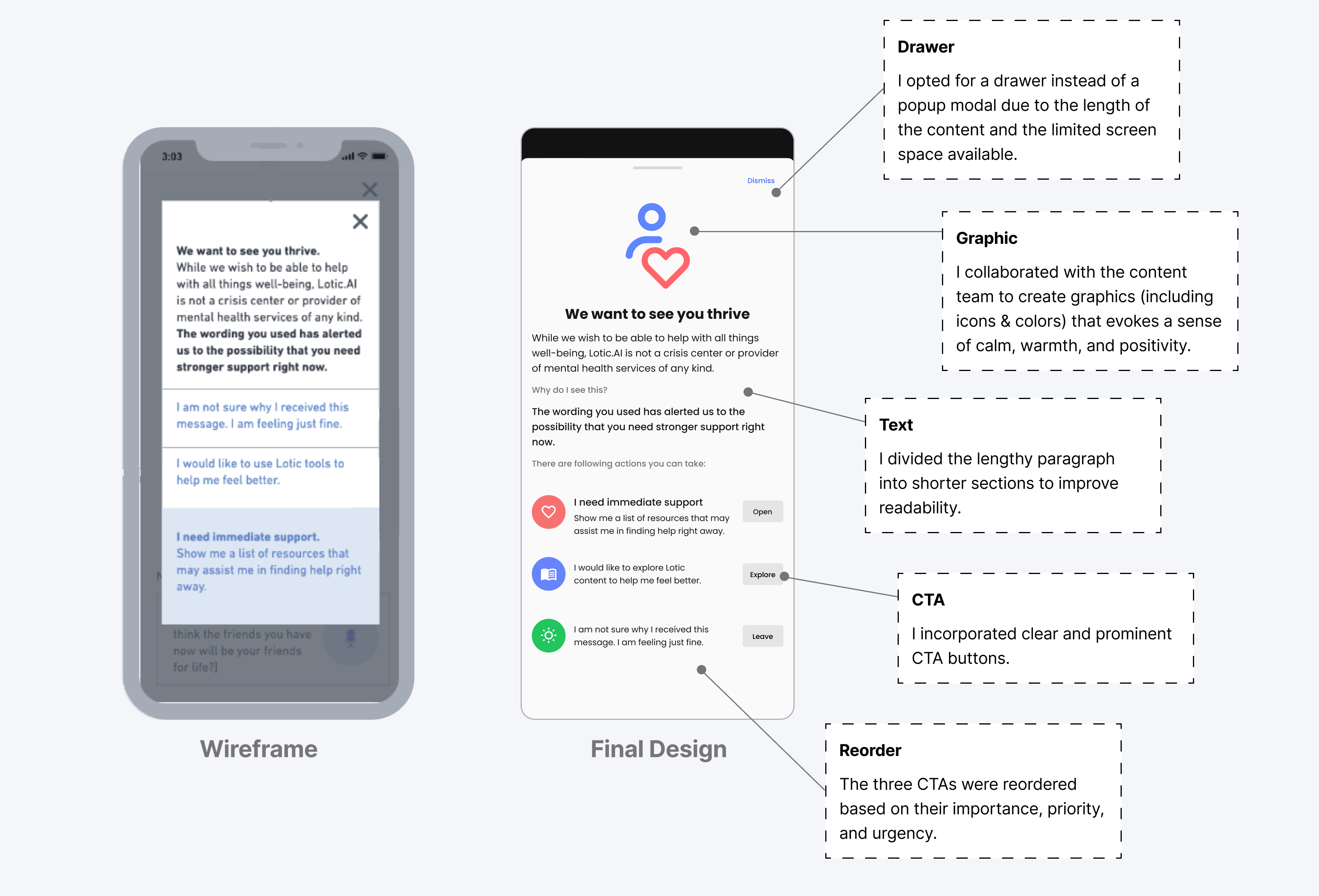

a, If the system detects potentially harmful words or phrases, the beaconing feature is activated. In designing this page, my goal was to evoke a sense of calm, warmth, and positivity.

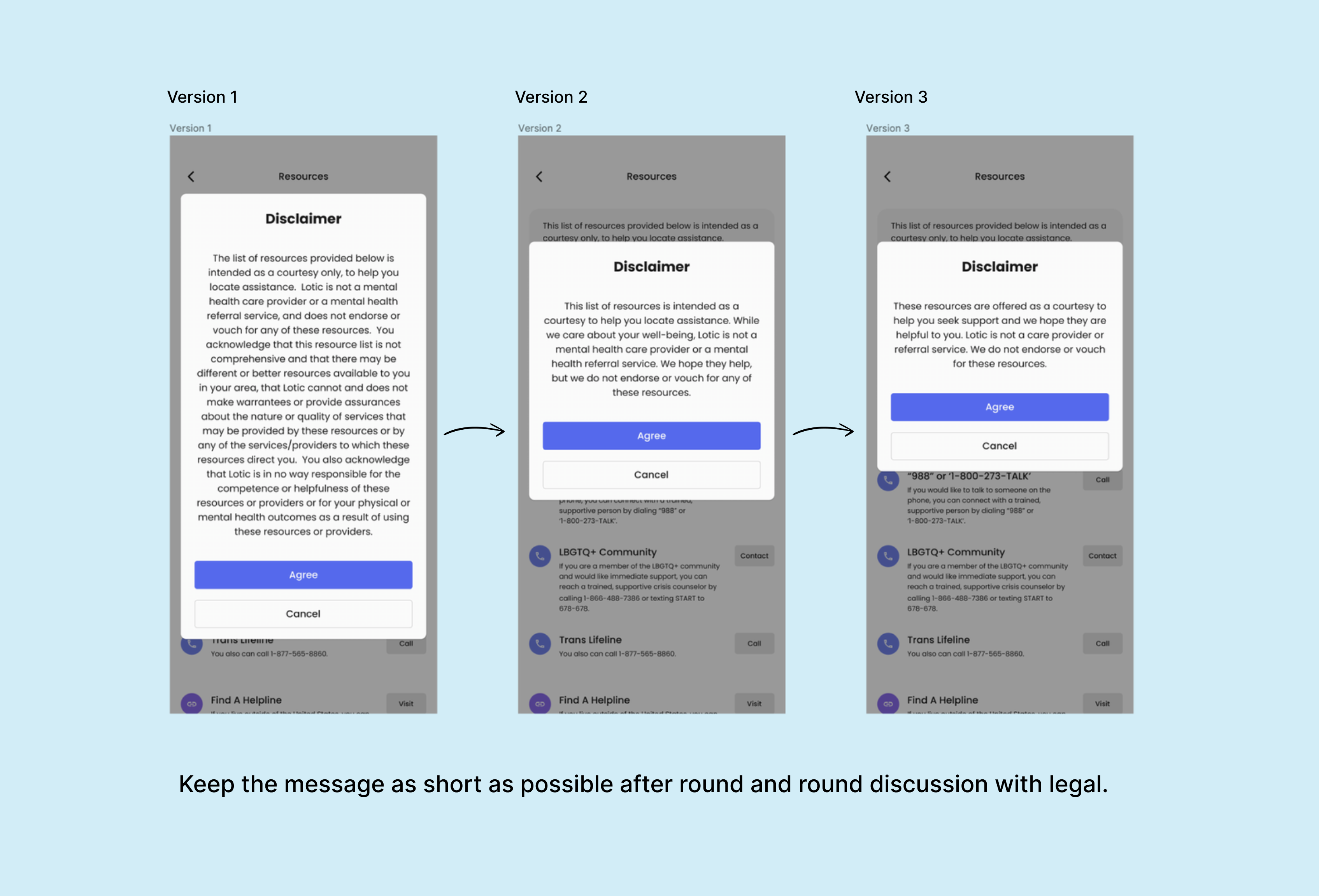

b, Long blocks of text can severely hurt the user experience, especially for people in distress who may not have the time or capacity to read lengthy content. One of my key priorities was therefore to shorten the text and improve readability, so that users would not feel overwhelmed and could focus on getting the help they need. The competitor analysis supported this approach: the social media apps I reviewed kept the language in their beaconing features concise and to the point.

The image below illustrates how I progressively reduced the text length through multiple rounds of discussions with the legal team.

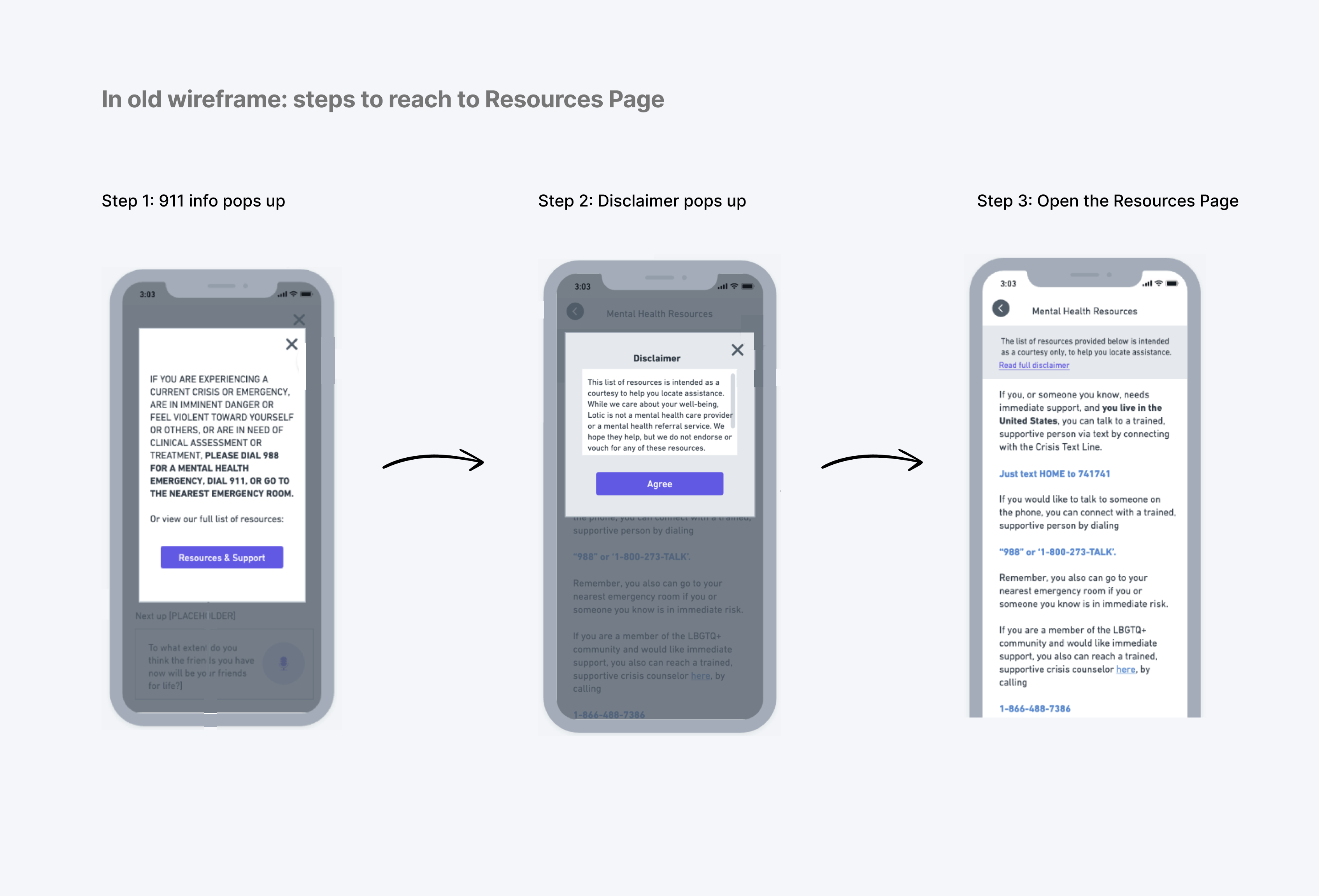

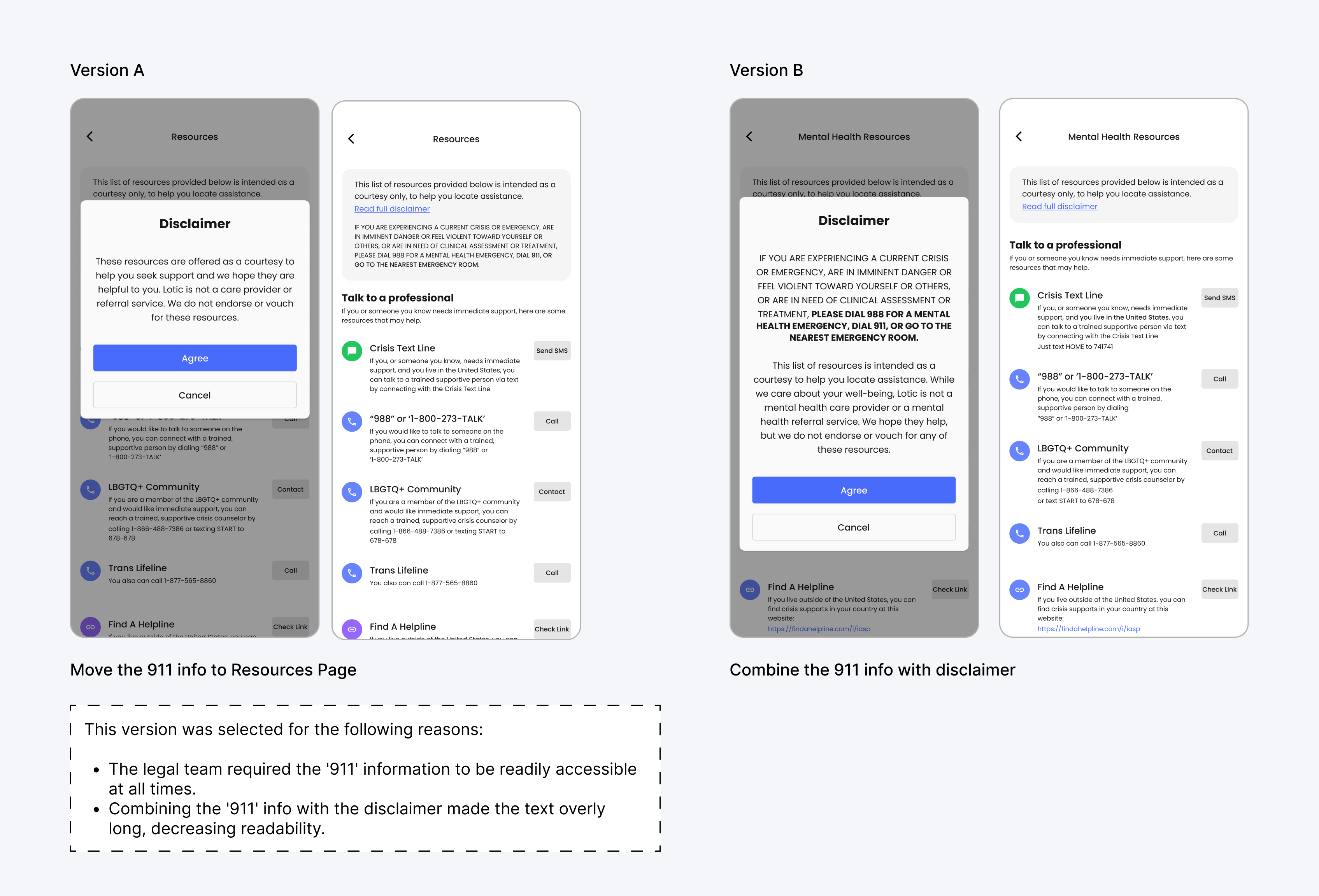

c, The wireframe initially presented a flow with too many steps, so I explored ways to simplify it while still meeting legal requirements. I designed two versions:

- Version A moved the '911' information to the top of the Resources Page, reducing the number of steps in the flow.

- Version B combined the '911' information with the disclaimer, also streamlining the flow.

After comparing the two options, we chose Version A for the following reasons:

- The legal team required the '911' information to be readily accessible at all times.

- Combining the '911' info with the disclaimer made the text overly long, decreasing readability.

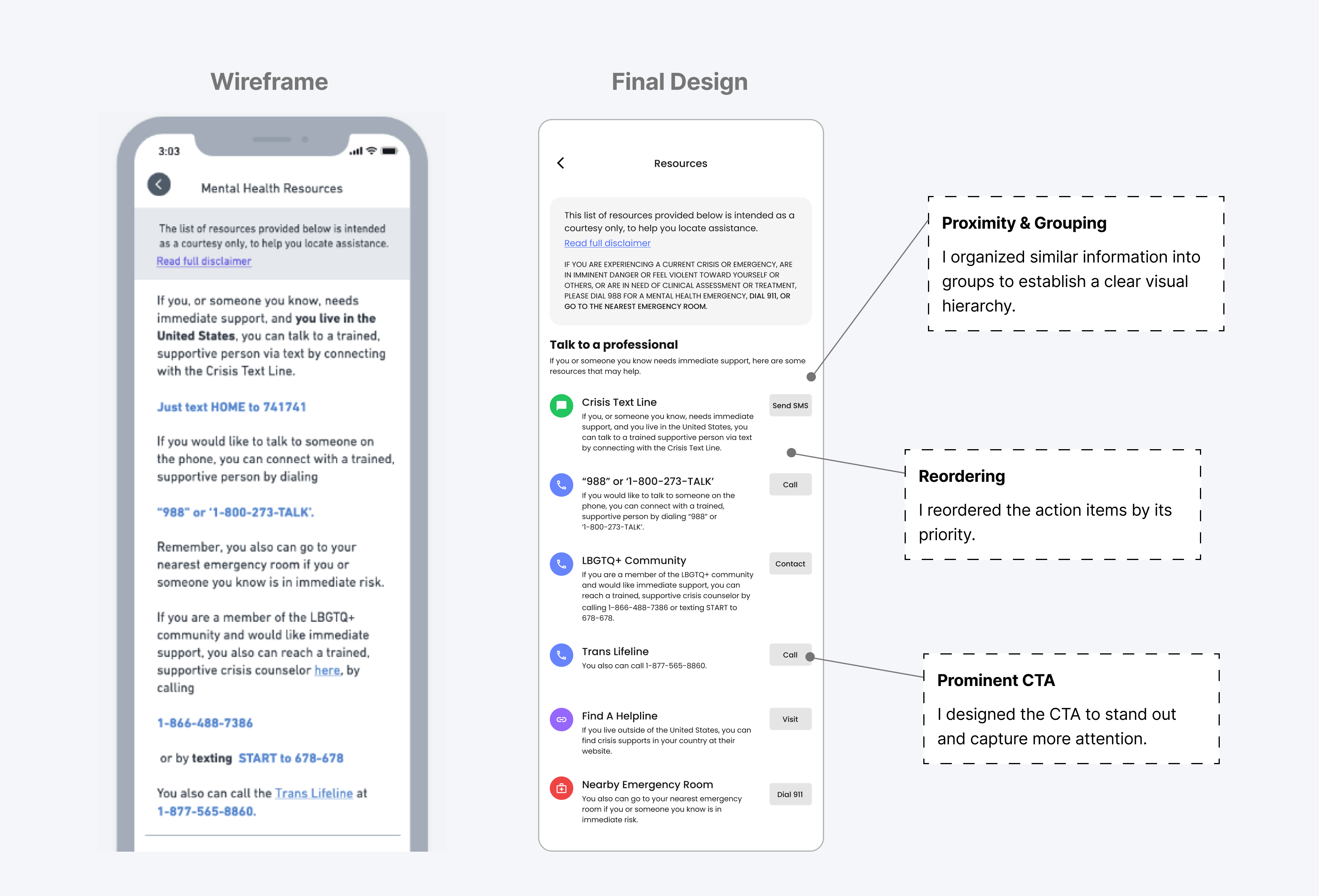

d, The Resources Page is a key element of the beaconing feature, as it's where users can access immediate support and assistance. The goal in designing this page was to make it well-organized, easy to read, and quick for users to find the help they need.

To design the Resources Page, I made decisions grounded in both design principles and research:

I applied the principle of proximity, which emphasizes grouping related elements to create a more organized and intuitive layout. By clustering similar contact options and resources, I made it easier for users to quickly understand the relationships between them and locate the support they need.

Additionally, my design was informed by the competitor analysis I conducted. For example, I noticed that social media platforms like Instagram and TikTok feature prominent, easy-to-access CTA buttons. This inspired me to make each contact button a focal point on the Resources Page, ensuring that users in distress could quickly access help without navigating a complicated interface.

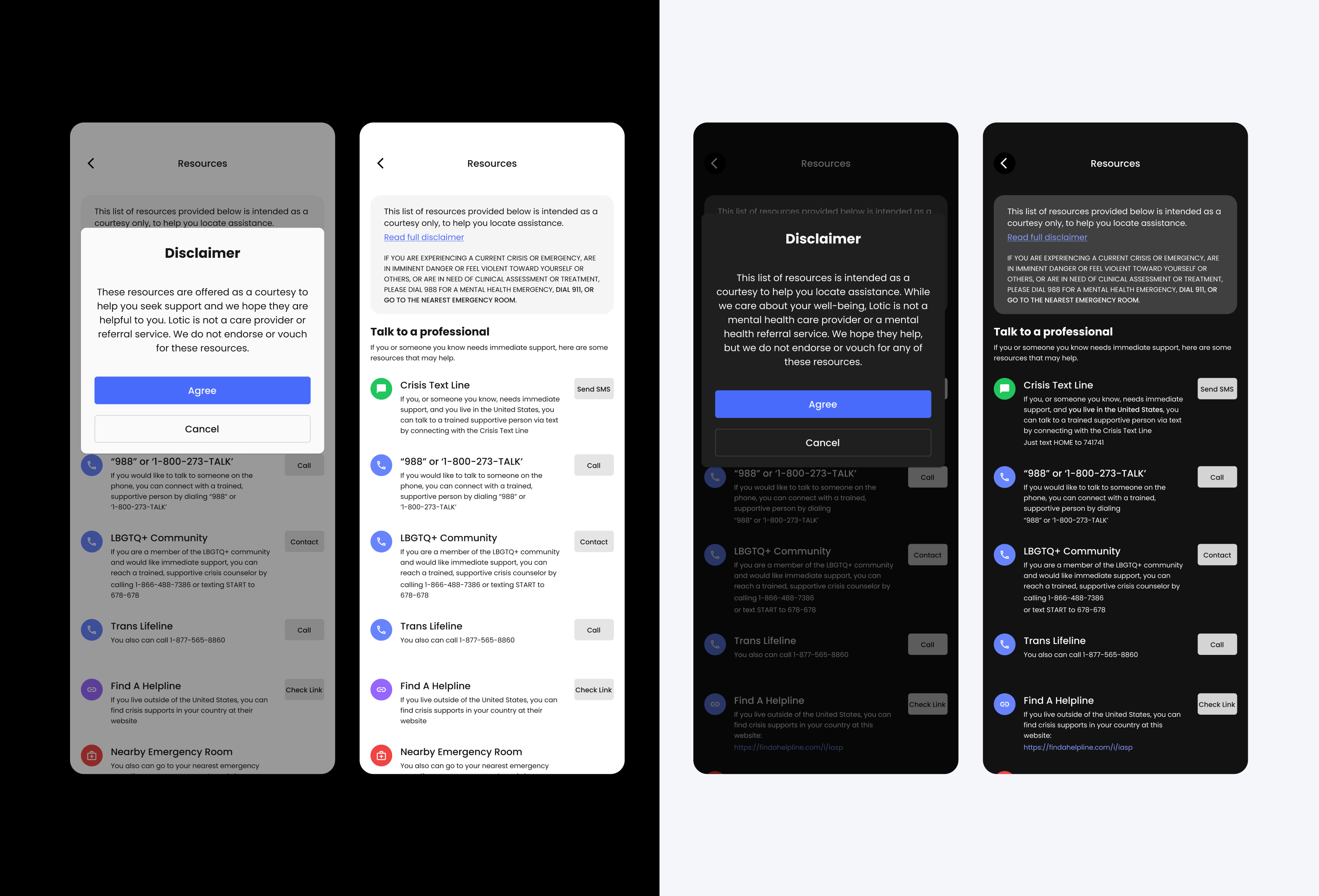

e, I designed the Resources Page in both dark mode and light mode, and at first I wasn't sure which to choose. Since the Lotic app primarily uses dark mode, keeping the Resources Page consistent with it seemed logical. However, dark mode can make text slightly harder to read and may evoke negative emotions, and my competitor analysis showed that light mode is commonly used for pages offering help and support. Taking this into account, I chose light mode for a more positive and accessible experience.

User testing limitations and adaptations

Given the sensitive nature of the beaconing feature, it was difficult to ethically recruit participants who were currently in distress for testing. This posed a challenge in accurately gauging how users under significant emotional strain would interact with the design. To adapt, I employed alternative testing methods to gather insights while maintaining ethical considerations.

One approach was using proxies, where we tested the feature with users who were not in immediate distress but could provide valuable feedback on the interface's clarity and ease of navigation. These participants were asked to imagine themselves in stressful situations, which allowed us to assess how intuitive the user flow was and how quickly users could locate help.

Additionally, we ran usability tests with users unfamiliar with the app to simulate how a first-time or distressed user might navigate the interface. By focusing on how easily participants could understand the prompts and complete the necessary steps, we gathered insights into potential pain points or confusion.

Finally, feedback from stakeholders, including the clinical behavioral science team, was invaluable in shaping the final design. Their expertise provided insights into how users in distress might react to certain elements, such as long blocks of text or complex navigation, and helped us adjust the design accordingly.

Although we couldn't fully replicate the emotional state of distressed users, this approach allowed us to improve the feature's usability while maintaining ethical standards.

Learning and reflection

This project marked my first time working closely with a legal team. Initially, the legal side pushed back significantly on requests to reduce text length and adjust the user flow, citing legal requirements and compliance concerns. Through open and effective communication, however, we collaborated and found a solution that balanced user experience with legal regulations. This experience taught me the critical importance of communication, particularly within cross-functional teams, and how essential it is to align diverse perspectives to achieve a successful outcome.

Thank you for taking the time to read this case study. I’m happy to answer any questions or discuss further. Feel free to contact me via email or LinkedIn.